1. Introduction

The growth of user-generated content initiatives, the spread of open educational practices (OEPs) and massive open online courses (MOOCs), and the emergence of new self-learning providers such as the Open Educational Resources university (OERu), the Peer 2 Peer University (P2PU) and the University of the People (UoPeople) are transforming familiar educational scenarios into new domains of an uncertain nature. This trend poses a challenge to conventional institutions, especially universities (Sangrà, 2013).

The rapid growth of MOOCs is regarded in both the popular and the scientific literature as a revolution with great potential for education and training (Bouchard, 2011; Aguaded, Vázquez-Cano, & Sevillano, 2013). The Horizon Report, led by the New Media Consortium and EDUCAUSE, offers a forward-looking study of the use of educational technologies and future trends in different countries. Its ninth edition (Johnson, Adams Becker, Cummins, Estrada, Freeman, & Ludgate, 2013) places particular emphasis on the impact of MOOCs on the current educational landscape. In addition, the Ibero-American edition oriented to higher education, a joint initiative of the eLearn Center of the Open University of Catalonia (UOC) and the New Media Consortium, predicts that MOOCs will be adopted by higher education institutions in the region within four to five years (Durall, Gros, Maina, Johnson, & Adams, 2012).

According to McAuley, Stewart, Siemens, and Cormier (2010a), the development of a MOOC raises a number of pedagogical questions:

- To what extent can MOOCs promote in-depth research and the creation of sophisticated knowledge?

- How can the breadth of student involvement and participation be balanced against its depth, given that participation may extend beyond those with broadband Internet access and advanced social networking skills?

- Which processes and practices might motivate relatively passive users to become more active or to adopt more participatory roles?

Moreover, specific strategies should be used to optimise the contribution of teachers and advanced participants.

However, many educational organisations offer MOOCs without ensuring that they meet the minimum quality standards that participants require. Users of distance learning must therefore be able to select the courses that best suit their needs and expectations, and educational organisations must improve their offerings to better satisfy their students.

A descriptive comparative analysis of the assessment tools for online courses can open up new scenarios for designing higher-quality, more efficient tools. Such tools would help narrow the gap between participants' expectations and their actual level of satisfaction. The broad e-learning offering would thereby gain in reliability and credibility, reducing the risk of user dropout and ensuring higher quality standards in online courses.

In this article, we lay the foundations for these new instruments through a comparative analysis of Standard UNE 66181:2012 (quality management of e-learning) and ADECUR, an analytical tool for teaching models and strategies in undergraduate online courses.

2. Theoretical framework

2.1. Pedagogical design of MOOCs

MOOCs have likewise been described in the popular and scientific literature as a revolution with great potential for education and training (Vázquez-Cano, López-Meneses, & Sarasola, 2013b). A growing number of training courses bearing the seal of prestigious universities worldwide are offered under this label. Understanding how these courses are pedagogically designed is therefore crucial for students and future course developers. A sound educational philosophy and an appropriate architecture for participation will help students acquire skills more effectively (Vázquez-Cano et al., 2013a).

According to McAuley, Stewart, Siemens, and Cormier (2010b), the fundamental characteristics of MOOCs are: free access with no limit on the number of participants, no certification for participants who take the course free of charge, instructional design based on audiovisual material supported by written text, and a collaborative, participatory methodology centred on the student, with minimal intervention from the teacher.

The open nature of knowledge carriers, or learning resources, belongs to a context in which what matters is the matrix of knowledge (Zapata-Ros, 2012): the procedures through which knowledge is developed in groups and in individuals. Thus, in MOOCs that are not purely connectivist, students encounter a fairly routine pattern in almost all universities and institutions, and almost every MOOC follows a similar structure (Vázquez-Cano et al., 2013a): a main page, a development page, and elements for participation and collaboration. These authors suggest that the design must be attractive and capable of developing competences, and that it must fulfil a number of objectives in a knowledge area or professional field. Moreover, platforms should offer a range of Web 2.0 social participation tools, such as blogs, wikis, forums and microblogs.

2.2. ADECUR assessment tool

ADECUR is an assessment tool for analysing and identifying the defining features of teaching quality in online courses, based on the scales provided by the socio-constructivist and research paradigm. It also serves to promote the proper development of educational innovation processes (Cabero & López, 2009).

This instrument, registered with the Spanish Patent and Trademark Office (dossier number in force: 2,855,153), is the result of the doctoral thesis entitled “Analysis of teaching models and teaching strategies in Tele-training: design and testing of an instrument for assessing teaching strategies of telematic undergraduate courses” (López, 2008). This tool has two main dimensions:

1. Psycho-educational dimension. It consists of six axes of progression: the virtual environment, the type of learning it promotes, the objectives, the content, the activities and their sequencing, and assessment and tutorial action.

2. Technical-aesthetic dimension. It consists of a single axis of progression: resources and technical aspects.

In addition, the tool lists a number of didactic elements as components of the axes of educational progression, which yields more detailed information when analysing teaching models and strategies.

The instrument consists of 115 items. Each item has one or more criteria and admits only two responses: “1” if the statement is met, or “0” if it is not. The tool arising from this research may be of considerable interest to education professionals and MOOC specialists.
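ADECUR fixes the response format (binary items), but the text does not say how item scores are aggregated. A minimal sketch, assuming scores are summarised as the proportion of items met within each axis of progression; the function name, axis labels and sample responses are hypothetical:

```python
from collections import defaultdict


def score_by_axis(responses):
    """Return, for each axis, the share of its items rated 1 (0.0 to 1.0).

    `responses` is a list of (axis, score) pairs; score is 1 when the item's
    statement is met and 0 when it is not.
    """
    met = defaultdict(int)
    total = defaultdict(int)
    for axis, score in responses:
        if score not in (0, 1):
            raise ValueError(f"ADECUR items are binary (0 or 1), got {score!r}")
        met[axis] += score
        total[axis] += 1
    return {axis: met[axis] / total[axis] for axis in total}


# Hypothetical responses for a handful of items (the real instrument has 115).
responses = [
    ("virtual environment", 1),
    ("virtual environment", 0),
    ("content", 1),
    ("content", 1),
    ("resources and technical aspects", 0),
]
print(score_by_axis(responses))
# {'virtual environment': 0.5, 'content': 1.0, 'resources and technical aspects': 0.0}
```

A proportion per axis keeps axes with different numbers of items comparable; a raw sum of 1s would be an equally valid reading of the binary scheme.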

This study opens a line of innovation and research on assessing the quality of MOOCs. Online training requires pedagogical models designed to promote a learning process that combines flexibility with well-structured programming and planning. These models must also establish open lines of communication and exchange in the virtual classroom, which help create environments that promote the construction of knowledge adapted to the particular needs of each participant.

This requires an approach based on what is shared and participatory within the teaching and learning group (Mercader & Bartolomé, 2006). Such an approach also evaluates these virtual environments in order to understand and reflect on their social and educational implications. Moreover, the research undertaken contributes significantly to curriculum innovation and evaluation by providing a tool for evaluating educational and technological hypermedia materials.

2.3. Standard UNE 66181:2012 on quality management of e-learning

In recent years, e-learning has developed remarkably, driven by globalisation and by advances in Information and Communication Technology (ICT), which have helped to improve and expand the existing educational offering.

Many organisations use this type of training to comply with clause 6.2 of Standard UNE-EN ISO 9001 on quality management systems, in order to “provide the necessary standards for their employees and guarantee their competence.” Likewise, clause 7.4 of that Standard makes it necessary “to ensure that the acquired e-learning meets specified purchase requirements”.

Standard UNE 66181:2012 is therefore intended as a guide for identifying the characteristics of e-learning programmes, so that users can select the online courses that best suit their needs and expectations, and educational organisations can improve their offering and thereby satisfy their students. The standard groups the satisfaction factors of e-learning into three dimensions: employability, learning methodology and accessibility.

Quality levels are expressed through a cumulative star rating, in which one star is the lowest level and five stars the highest. The level attained in each dimension is shown by the corresponding number (1 to 5) of black (filled) stars, filled in from the left until all five are reached. Because the quality levels of this standard are cumulative, each level also includes the content of all previous levels.

For this study, however, these rubrics were adapted into a tool that can easily measure courses against quality indicators. That is, a MOOC may meet indicators from different quality levels of the rubrics without the levels being cumulative: each quality level can be evaluated on its own and need not contain the sum of the indicators of the previous levels.
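The difference between the cumulative reading of the standard and the adapted, non-cumulative reading can be made concrete. A minimal sketch, assuming each star level is a set of indicators marked met (True) or unmet (False); the function names, indicator labels and values are hypothetical:

```python
def cumulative_stars(levels):
    """Cumulative reading (as in UNE 66181:2012): a course reaches level k only
    if it meets every indicator of levels 1..k, so counting stops at the first
    level with an unmet indicator."""
    stars = 0
    for indicators in levels:
        if not all(indicators.values()):
            break
        stars += 1
    return stars


def per_level_coverage(levels):
    """Non-cumulative reading: each level is scored on its own as the share
    of its indicators that the course meets."""
    return [sum(ind.values()) / len(ind) for ind in levels]


# Hypothetical course: levels[0] is the one-star level, levels[4] the five-star
# level; indicator names and True/False values are illustrative only.
course = [
    {"1a": True},
    {"2a": True, "2b": True},
    {"3a": False, "3b": True},
    {"4a": True},
    {"5a": False},
]
print(cumulative_stars(course))    # 2
print(per_level_coverage(course))  # [1.0, 1.0, 0.5, 1.0, 0.0]
```

Under the cumulative rule this course stops at two stars even though it meets the four-star indicator; the per-level reading keeps that information.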

3. Study and analysis of the research scenario

This study forms part of the line of work initiated in the teaching research project “Innovation 2.0 Information and Communication Technology in the European Higher Education Area”, within the framework of the Action 2 projects for Educational Innovation and Development funded by the Department of Teaching and European Convergence at Pablo de Olavide University, Seville, Spain. It was carried out at the Laboratory for Computational Intelligence under the direction of Professor Salmerón.

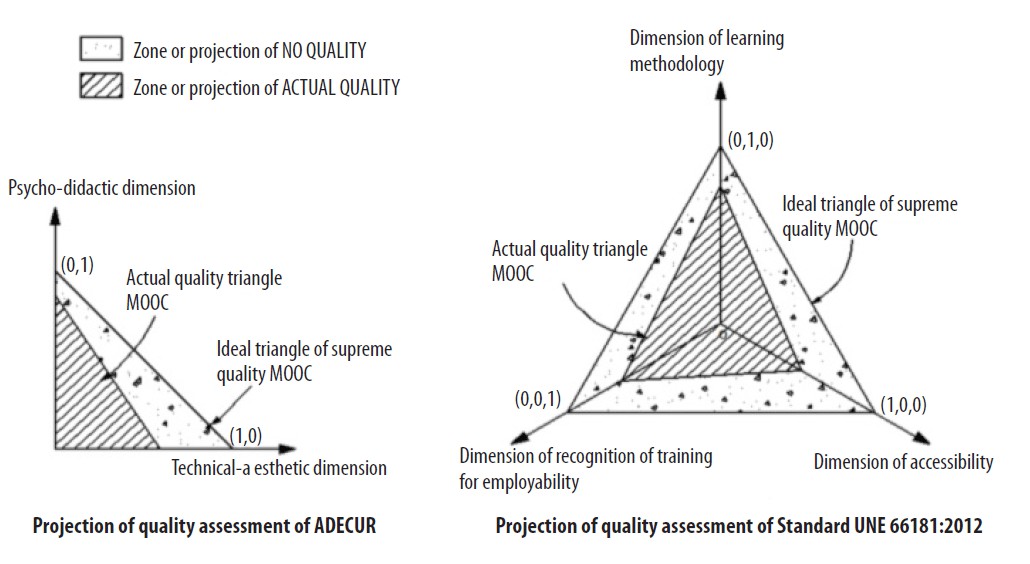

Figure 1 represents the quality of MOOCs as triangles for each of the two instruments. On the one hand, it shows the orthic ideal triangle of a MOOC of supreme quality: an equilateral triangle for the three dimensions of the UNE Standard and a right triangle for the two dimensions of the ADECUR instrument, with the highest score in every quality dimension (each axis is cut at point 1). This ideal triangle is described as orthic because it is a projection surface of maximum quality and serves as a benchmark for measuring the “lack of quality” of MOOCs. On the other hand, the actual quality triangle of any given MOOC (hatched area) is also represented for both instruments, cutting the same axes at points below 1.

Figure 1. Representation of isometric triangles of quality of the instruments analysed. Source: original content
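The text uses the ideal triangle as a benchmark for the “lack of quality” of a MOOC but gives no formula. One possible quantification, offered purely as an assumption, compares the area of the actual triangle with that of the ideal one, placing the three UNE axes 120 degrees apart and the two ADECUR axes at right angles; the function names are hypothetical:

```python
import math


def quality_triangle_area(scores):
    """Area of the triangle whose vertices sit at the given scores (0 to 1)
    on axes drawn from a common origin.

    3 scores (UNE): axes 120 degrees apart, so the ideal is equilateral.
    2 scores (ADECUR): perpendicular axes, so the ideal is a right triangle.
    """
    if len(scores) == 3:
        a, b, c = scores
        # Sum of the three sub-triangles between adjacent axes (120 degrees each).
        return 0.5 * math.sin(2 * math.pi / 3) * (a * b + b * c + c * a)
    if len(scores) == 2:
        a, b = scores
        # Right triangle formed by the origin and the two axis intercepts.
        return 0.5 * a * b
    raise ValueError("expected 2 (ADECUR) or 3 (UNE) dimension scores")


def lack_of_quality(scores):
    """Share of the ideal (orthic) triangle, with every axis cut at 1,
    that the course's actual triangle does not cover."""
    ideal = quality_triangle_area([1.0] * len(scores))
    return 1 - quality_triangle_area(scores) / ideal


print(round(lack_of_quality([1.0, 1.0, 1.0]), 2))  # 0.0
print(round(lack_of_quality([0.5, 0.5, 0.5]), 2))  # 0.75
print(round(lack_of_quality([0.8, 0.5]), 2))       # 0.6
```

Because area grows with the product of scores, halving every UNE score removes three quarters of the triangle; a linear measure such as the mean score would penalise the same course less.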

3.1. Comparison of the quality assessment instruments ADECUR and Standard UNE 66181:2012

This section discusses the indicators that the two assessment tools share and those that differ. The aim is to conduct an internal analysis of ADECUR and the UNE Standard that establishes the actual scope of each instrument, as well as the risks and opportunities of using them to evaluate online courses.
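In set terms, the comparison amounts to an intersection (the common indicators) and two differences (the non-common indicators of each tool). The sketch assumes every indicator has already been matched to a shared label, a step that in practice requires expert judgement because the two instruments word their indicators differently; the function name and the small label sets, taken from Tables 1 and 2, are illustrative only:

```python
def compare_instruments(une, adecur):
    """Split two sets of indicator labels into common and non-common subsets."""
    return {
        "common": une & adecur,       # indicators both instruments share
        "only_une": une - adecur,     # UNE strengths, i.e. gaps in ADECUR
        "only_adecur": adecur - une,  # ADECUR strengths, i.e. gaps in UNE
    }


# A few indicator labels, used only for illustration.
une = {
    "Entails general learning objectives",
    "Students may engage in self-assessment",
    "The learning objectives are organised by skills",
    "Individual feedback is provided",
}
adecur = {
    "Entails general learning objectives",
    "Students may engage in self-assessment",
    "Assessment is formative",
    "Promotes gradual access to content",
}

result = compare_instruments(une, adecur)
for key, labels in result.items():
    # Sort for stable output: Python sets have no guaranteed order.
    print(key, sorted(labels))
```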

3.1.1. Analysis of common indicators

Our starting point is the analysis of the indicators that ADECUR and Standard UNE 66181:2012 share across their quality evaluation subfactors, organised according to the dimensions of the Standard. Table 1 therefore shows only the common quality indicators of the “Learning Methodology” dimension, since no other dimension has indicators in common.

| Dimension: Learning Methodology | Indicators |
|---|---|
| Subfactor 2.1 Didactic instructional design | Entails general objectives |
| | Entails general learning objectives |
| | Entails specific learning objectives |
| | Entails a method of learning and identifiable activities |
| | Knowledge assessment is made at the end of the course |
| | Activities and problems develop in a realistic context |
| | Some degree of freedom is allowed in the training schedule |
| | There is an initial evaluation that provides information about learning needs and, after the final evaluation, the lessons learned during the course |
| | The learning methodology is based on solving problems or carrying out real projects with direct involvement in society |
| Subfactor 2.2 Training resources and learning activities | The training resources are only reference material for self-study |
| | The training resources allow student interaction |
| | Students may engage in self-assessment |
| | Instructions are provided for the use of training resources |
| | Students must conduct individual and group practical activities |
| | A teaching guide is provided with information about the course |
| | There is variety in the training resources and different interaction models |
| | Complex individual and group practical activities are proposed |
| | Synchronous sessions are scheduled by the trainer |
| | Knowledge management is facilitated |
| Subfactor 2.3 Tutorial | The course tutors respond to student questions without a pre-set time |
| | Answers to questions about the course content are given in a pre-set time |
| | Tutors keep track of learning |
| | The students’ progress in relation to pre-defined learning indicators is considered |
| | Personalised learning and individual tracking are provided |
| Subfactor 2.4 Technological and digital learning environment | Provides information on hardware and software requirements |
| | At least some asynchronous communication tools are available |
| | There is a digital technology learning environment that integrates content and communication |
| | Includes a section of frequently asked questions and/or help |
| | Enables or has mechanisms or components that facilitate student orientation within the environment and the learning process |

3.1.2. Analysis of non-common indicators

An internal analysis of the non-common indicators reveals certain weaknesses in the instrument that lacks them, since these gaps limit the scope of that tool when evaluating any MOOC. Conversely, the instrument that does contain these non-common indicators gains strengths in terms of the dimensional scope of the assessment, as shown in Table 2.

| STANDARD UNE 66181:2012 | |
|---|---|
| Dimension 1: Recognition of training for employability | Indicators |
| Subfactor 1.1 Recognition of training for employability | All |
| Dimension 2: Learning Methodology | Indicators |
| Subfactor 2.1 Teaching-Instructional Design | The learning objectives are organised by skills |
| | Post-course monitoring |
| Subfactor 2.3 Tutorial | Existence of a personalised programme of contacts |
| | Individual feedback is provided |
| | Individualised synchronous sessions are scheduled |
| Subfactor 2.4 Technological and digital learning environment | Enables groups of students and tasks to be managed via access logins and reports |
| | Resumes the learning process where it left off in the previous session |
| | Allows repositories for sharing digital files among its members |
| | Allows discussion forums and student support |
| | Allows visual indicators of learning progress |
| | Allows management and reuse of best practices |
| | Allows use of different presentation formats |
| | Allows collaborative or active-participation technology |
| Dimension 3: Accessibility levels | Indicators |
| Subfactor 3.1 Hardware accessibility | All |
| Subfactor 3.2 Software accessibility | All |
| Subfactor 3.3 Web accessibility | All |
| ADECUR | |
| Dimension 1: Psycho-educational | Indicators |
| Subfactor 1.1 Virtual environment | Fosters a generally motivating context |
| | Promotes a caring and democratic environment |
| Subfactor 1.2 Learning | Provides different levels of initial knowledge |
| | Introduces resources that help relate the lessons learned from initial personal experiences |
| | Uses different procedures to facilitate and enhance understanding |
| | Boosts negotiation and sharing of meanings |
| Subfactor 1.4 Content | Proposes the use of different content as raw materials for the construction of learning |
| | Content arises in the context of each of the activities |
| | The documentary content is updated |
| | Prior knowledge is considered as content |
| | Allows inquiries to specialists external to the online course |
| | The content is relevant |
| | The information and language used are suitable |
| | The formulation of content is appropriate to the construction process |
| | Facilitates and promotes access to conceptual, procedural and attitudinal content |
| | Promotes gradual access to content |
| Subfactor 1.5 Activities and sequencing | Includes activities to relate prior knowledge to new content |
| | Includes activities to insert knowledge within wider schemes |
| | Includes activities that facilitate communication and discussion of personal knowledge |
| | Includes activities to reflect on what they have learned, the processes followed and the difficulties faced |
| | Includes activities that promote decision making |
| | Includes activities that foster independent learning |
| | Includes activities that promote a research approach |
| | The activities are organised into coherent sequences with constructivist perspectives and research |
| Subfactor 1.6 Evaluation and action | Assessment is formative |
| | Includes assessment processes led by students |
| Subfactor 1.7 Tutorial | Includes personal realisation of different screening tests on learning outcomes |
| | Presents a virtual space for evaluation |
| | The initiation and development of the activities are oriented and energised |
| | A virtual dynamic element that acts as a guide is incorporated |
| Dimension 2: Technical-aesthetic | Indicators |
| Subfactor 2.1 Resources and technical aspects | Retrieval of information is provided |
| | Is easy to use |

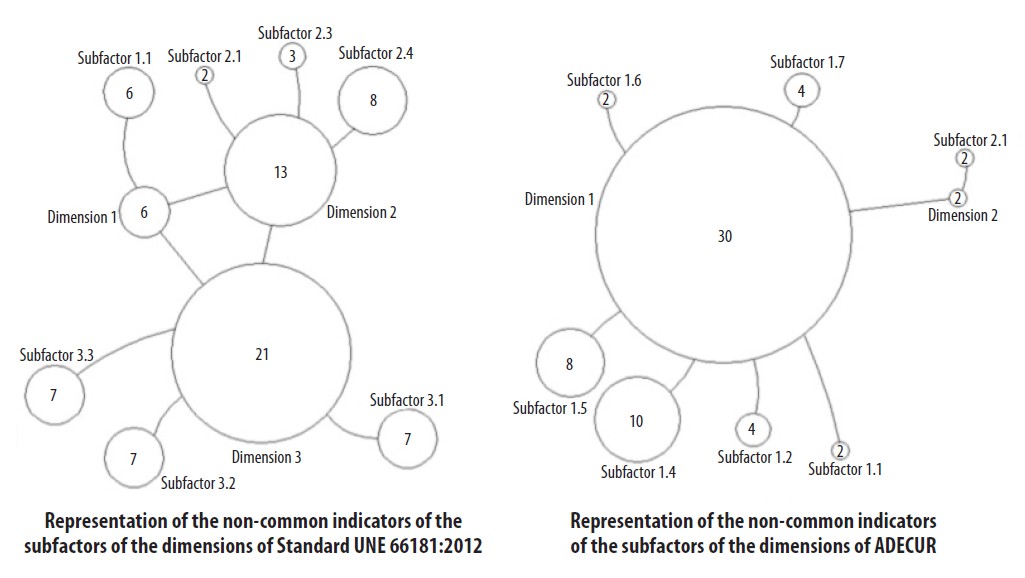

Figure 2 depicts the strengths of Standard UNE 66181:2012 and the ADECUR instrument graphically. The dimensions of each instrument are drawn as linked nodes of different sizes, and each dimension is connected to its component subfactors. In this way, the strength of each tool is represented as a map of its dimensions and non-common subfactors. The number inside each subfactor node is the count of the tool's non-common indicators in that subfactor, and the node's size is proportional to it. Likewise, the number inside each dimension node is the total number of non-common indicators across all its subfactors, and the node's size is also proportional to it.

Figure 2. Graphical representation of the strengths of the instruments analysed. Source: original content

Accordingly, Standard UNE 66181:2012 has 6 non-common indicators in dimension 1, 13 in dimension 2 and 21 in dimension 3, while the ADECUR tool has 30 non-common indicators in dimension 1 and 2 in dimension 2.
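The node values in Figure 2 are plain counts and sums. As a cross-check, the following sketch tallies the rows of Table 2 per subfactor and sums them per dimension; the data layout, the function names and the `scale` factor for node sizes are illustrative assumptions, not part of the original figure:

```python
# Non-common indicator counts per subfactor, read from Table 2. Where the
# table only says "All" (UNE dimensions 1 and 3), the per-subfactor split is
# not listed, so the dimension totals stated in the text are used instead.
NON_COMMON = {
    "UNE 66181:2012": {
        "Dimension 1": {"Subfactor 1.1 (all)": 6},
        "Dimension 2": {"Subfactor 2.1": 2, "Subfactor 2.3": 3, "Subfactor 2.4": 8},
        "Dimension 3": {"Subfactors 3.1-3.3 (all)": 21},
    },
    "ADECUR": {
        "Dimension 1": {
            "Subfactor 1.1": 2, "Subfactor 1.2": 4, "Subfactor 1.4": 10,
            "Subfactor 1.5": 8, "Subfactor 1.6": 2, "Subfactor 1.7": 4,
        },
        "Dimension 2": {"Subfactor 2.1": 2},
    },
}


def dimension_totals(tool):
    """Value of each dimension node: the sum of its subfactor node values."""
    return {dim: sum(subs.values()) for dim, subs in NON_COMMON[tool].items()}


def node_sizes(tool, scale=10):
    """Node sizes proportional to the counts; `scale` is an arbitrary choice."""
    return {dim: scale * total for dim, total in dimension_totals(tool).items()}


for tool in NON_COMMON:
    print(tool, dimension_totals(tool))
# UNE 66181:2012 {'Dimension 1': 6, 'Dimension 2': 13, 'Dimension 3': 21}
# ADECUR {'Dimension 1': 30, 'Dimension 2': 2}
```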

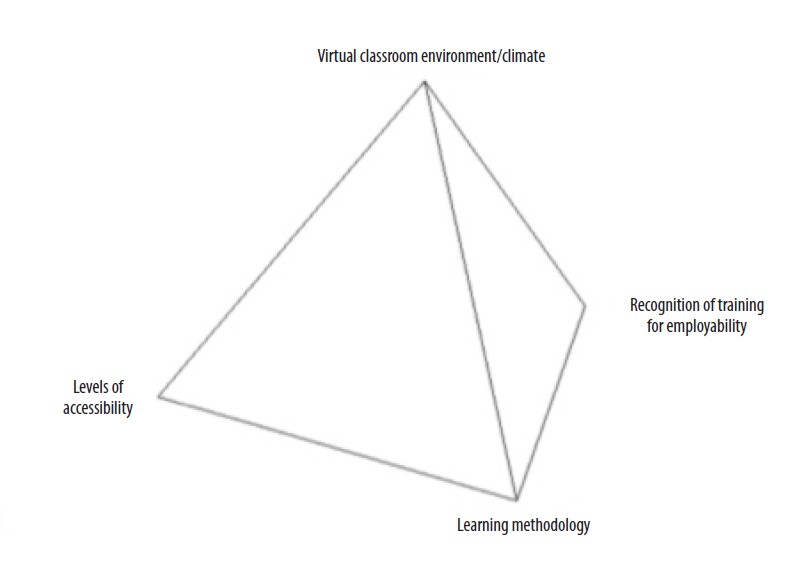

3.2. Design of new tools for evaluating the quality of MOOCs

This study proposes guidelines, or bases, for configuring a new instrument that avoids the weaknesses and retains the strengths of the two instruments described above. The new tool should therefore consist of four dimensions: recognition of training for employability, learning methodology, levels of accessibility and virtual classroom environment/climate. To the three dimensions of Standard UNE 66181:2012, we add the non-common indicators of the ADECUR dimensions. A fourth dimension of didactic progression is thus added, “Virtual classroom environment/climate”, taken from the ADECUR instrument; it has no indicators in common with Standard UNE 66181:2012 and represents a new key factor in shaping these tools. Figure 3 represents this design as the tetrahedral dimensions of future tools for evaluating the quality of MOOCs.

Figure 3. Representation of the tetrahedral dimensions of the new instruments for the quality assessment of MOOCs. Source: original content

Based on the above, Table 3 shows the configuration of the new tools for assessing the quality of MOOCs. These instruments should comprise a base of common quality indicators (Table 1), the four tetrahedral dimensions (Figure 3), and the subfactors or axes of progression that contain non-common indicators (Table 2).

| COMMON INDICATORS OF QUALITY | | | |
|---|---|---|---|
| Dimension: Learning methods (ADECUR tools and UNE) | | | |
| Subfactor 2.1 | Subfactor 2.2 | Subfactor 2.3 | Subfactor 2.4 |
| TETRAHEDRAL DIMENSIONS | | | |
| Dimension 1: Recognition of training for employability | Dimension 2: Learning methodology | Dimension 3: Levels of accessibility | Dimension 4: Virtual classroom environment/climate |
| Subfactor 1.1 (all indicators) | Subfactor 2.1 | Subfactor 3.1 | Subfactor 4.1 (all indicators) |
| | Subfactor 2.3 | Subfactor 3.2 | |
| | Subfactor 2.4 | Subfactor 3.3 | |
| UNE Standard | ADECUR and UNE Standard | UNE Standard | ADECUR |
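For readers building software around the proposal, the layout of Table 3 maps naturally onto a small data structure. A minimal sketch, assuming a hypothetical `Dimension` record with a source field; the labels follow the table, while the class, the constant names and `describe` are illustrative inventions rather than part of the proposal:

```python
from dataclasses import dataclass, field


@dataclass
class Dimension:
    """One dimension of the proposed instrument (hypothetical structure)."""
    name: str
    source: str  # instrument(s) the dimension's indicators come from
    subfactors: list[str] = field(default_factory=list)


# Common quality indicators shared by ADECUR and UNE (learning methodology).
COMMON_BASE = ["Subfactor 2.1", "Subfactor 2.2", "Subfactor 2.3", "Subfactor 2.4"]

# The four tetrahedral dimensions with their non-common subfactors.
TETRAHEDRAL = [
    Dimension("Recognition of training for employability", "UNE Standard",
              ["Subfactor 1.1 (all indicators)"]),
    Dimension("Learning methodology", "ADECUR and UNE Standard",
              ["Subfactor 2.1", "Subfactor 2.3", "Subfactor 2.4"]),
    Dimension("Levels of accessibility", "UNE Standard",
              ["Subfactor 3.1", "Subfactor 3.2", "Subfactor 3.3"]),
    Dimension("Virtual classroom environment/climate", "ADECUR",
              ["Subfactor 4.1 (all indicators)"]),
]


def describe(common, dims):
    """Render the instrument layout as plain text, one line per component."""
    lines = ["Common base (learning methodology): " + ", ".join(common)]
    for number, dim in enumerate(dims, start=1):
        lines.append(
            f"Dimension {number}: {dim.name} [{dim.source}] -> {', '.join(dim.subfactors)}"
        )
    return "\n".join(lines)


print(describe(COMMON_BASE, TETRAHEDRAL))
```

Keeping the source of each dimension explicit makes it easy to trace every indicator in a future instrument back to UNE 66181:2012 or ADECUR.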

4. Discussion and conclusions

This study narrows the differences between Standard UNE 66181:2012 and the indicators of the ADECUR tool in evaluating the educational action of MOOCs, through a new analytical and visual tool that minimises the weaknesses of the two instruments analysed. Further research is nevertheless needed to design new instruments that take into account all of the indicators of every dimension.

Moreover, platforms that offer certified MOOCs could be accredited, which would prevent the provision of educational actions with inappropriate methodologies (Valverde, 2014). Accreditation would also help counter, as far as possible, the trend towards the standardisation of knowledge and its serious drawbacks, and would help attend to the individual differences that overcrowding tends to neglect. Overcrowding leads to designs based on one-way communication, centred on the teacher and on content. In this way, MOOCs could become a vehicle for the democratisation of higher education, in which pedagogical interests take precedence over economic ones.

In any case, the assessment of the quality of MOOCs is an emerging research field. We therefore consider that more studies are needed on certain indicators for assessing the quality of online courses, as well as longitudinal (Stödberg, 2012) and comparative studies (Balfour, 2013). More specifically, research should continue into methods that improve student assessment (reliability, validity, authenticity and security), effective automated assessment, immediate feedback systems and better guarantees of usability (Oncu & Cakir, 2011).